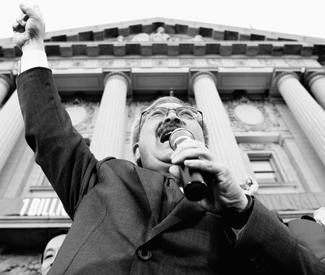

In late November, the University of San Francisco conducted a poll, released in conjunction with the San Francisco Chronicle, that claimed 73 percent of San Franciscans approve of Mayor Ed Lee’s performance.

It didn’t take long for Lee’s supporters to begin touting the figure as fact; soon after the poll appeared on SFGate.com on Dec. 9, the results wallpapered the comment section of the Guardian’s website as the answer to any criticism of Mayor Lee, his policies, or the city’s eviction and gentrification crises.

After all, it was a big number that seemed to suggest widespread support. But closer analysis shows this “online poll” wasn’t really a credible poll, and that number is almost certainly greatly inflated. [Editor’s update 1/13: The authors of this survey contest the conclusions of this article, and we have changed the word “bogus” in the original headline to “flawed.” The issue of the reliability of opt-in online surveys is an evolving one, so while we stand by our conclusions in this article that the 73 percent approval figure is misleading and difficult to support, we urge you to read Professor Corey Cook’s response here and our discussion of this issue in this week’s Guardian.]

The problems with the USF “poll” are numerous, but the most glaring has to do with its lack of random selection. According to the New York Times Style Guide, a poll’s value rests on what’s called a “probability sample,” meaning respondents are chosen at random so that the results can represent the beliefs of the larger citizenry.

The USF poll registered responses from 553 San Franciscans. That number itself isn’t the issue, or it wouldn’t be if those 553 individuals were procured through a random process. But they weren’t, and it wasn’t even close.

According to David Latterman, a USF professor, co-conductor of the poll, and downtown-friendly political consultant, the survey participants were obtained via an “opt-in” list, meaning that anyone who participated in this particular poll had previously stated they were willing to take part in polls. This phenomenon is known as self-selection.

“We work with a rather large national firm and they have a whole series of opt-in panels,” Latterman told the Guardian. “So they’ve got lists of thousands of people who have basically said, ‘Yes, we’ll take a poll.’ And the blasts go out to these groups of people.”

That means that even before the poll was conducted, the sample had already been tilted toward a certain set of citizens and away from anything that could be classified as “random.” Even the Chronicle acknowledged in the small type that “Poll respondents were more likely to be homeowners,” further narrowing the field to the one-third of city residents who own their homes, generally its most affluent third.

Even if pollsters could match the demographics of the polled with the “true demographics,” as Latterman called them, that still wouldn’t address the problem of self-selection. And that’s not all: according to Latterman, the list of “opt-in” participants, which was acquired through a third-party vendor, contained only English-speaking registered voters. Everyone was contacted via email, another red flag in the world of accurate polling.

Interestingly, the USF “poll” also found that 86 percent of respondents said lack of affordability was a major issue in the city, while 49.6 percent of that same group considered housing developers most at fault for the astronomical real estate prices. So, to recap: this poll, touted by many people as gospel in the comment section of this site, found that while the City is totally unaffordable, the man in charge of the City is barely culpable for that situation, and he remains incredibly popular.

According to the NYT Style Guide, “Any survey that relies on the ability and/or availability of respondents to access the Web and choose whether to participate is not representative and therefore not reliable.”

Uh oh.

Russell D. Renka, professor of political science at Southeast Missouri State University, expressed far stronger feelings on the matter in his paper “The Good, the Bad, and the Ugly of Public Opinion Polling,” writing that a self-selected sample “trashes the principle of random selection… A proper medical experiment never permits someone to choose whether to receive a medication rather than the placebo.”

Strike two.

He then writes, “Any self-selected sample is basically worthless as a source of information about the population beyond itself.”

Strike three.

So then why were such frowned-upon methods used in this poll?

Latterman attributes the tactics to several factors, but mostly to San Francisco’s rapidly changing technological landscape, coupled with the high cost of alternative methods and the city’s large population of renters.

“San Francisco is a more difficult model,” Latterman said. “So Internet polling has to get better, because phone polling has gotten really expensive.”

But even if Internet polling is still improving, those limitations should be noted prominently in the original coverage, lest readers get the wrong idea, or simply a misinformed one. Unless, that is, the goal is less about polling than about selling San Franciscans on the idea that Mayor Lee enjoys widespread support.